Every day we deal with increasing traffic to and from ToolsLib. The last few weeks have been interesting to follow, since we moved to a larger scale a bit earlier than expected, but the results so far are very positive. We want to share some figures, graphs and explanations about how we handle heavy traffic and a growing quantity of data on our infrastructure.

Frontend

With several hundred thousand downloads each day, we deliver a significant amount of outbound traffic. We used to handle a continuous flow of less than 100 Mbps, but those days seem to be behind us. We now handle at least double that, with more than 300 Mbps at peak times.

Hence, we now have 2x1 Gbps of outbound traffic capacity.
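To put these numbers side by side, here is a minimal sketch of the arithmetic. It assumes that the 200 Mbps and 300 Mbps figures are platform-wide totals and that "2x1 Gbps" means two 1 Gbps links whose capacity adds up; the function names are ours, for illustration only.

```python
def utilization(traffic_mbps: float, links: int = 2,
                link_capacity_mbps: float = 1000.0) -> float:
    """Fraction of the total outbound capacity used by the given traffic."""
    return traffic_mbps / (links * link_capacity_mbps)


def daily_volume_tb(traffic_mbps: float) -> float:
    """Data sent in 24 hours at a constant rate, in terabytes (10**12 bytes)."""
    bytes_per_second = traffic_mbps * 1_000_000 / 8
    return bytes_per_second * 86_400 / 1e12


if __name__ == "__main__":
    # Assumed interpretation: 200 Mbps sustained, 300 Mbps at peak.
    for label, mbps in (("sustained", 200), ("peak", 300)):
        print(f"{label}: {utilization(mbps):.0%} of capacity, "
              f"{daily_volume_tb(mbps):.2f} TB/day if held for 24 hours")
```

Under these assumptions, the peak uses about 15% of the available capacity, which leaves comfortable headroom for sudden increases.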

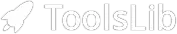

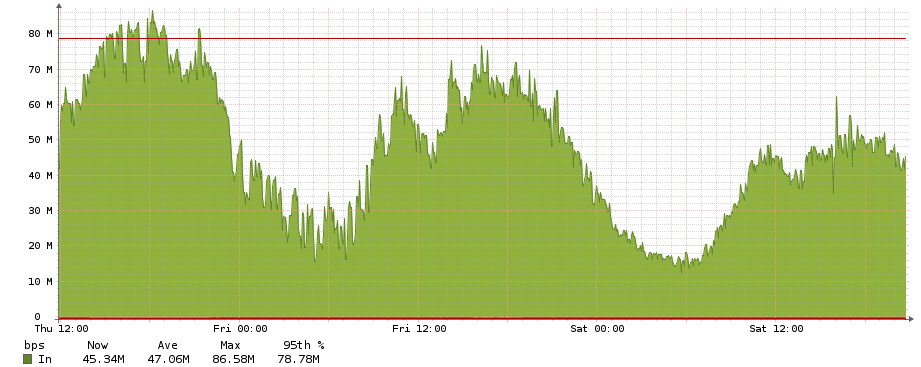

The outbound traffic since Thursday midday on one of our frontends.

This graph only shows the traffic on one frontend, during a period without exceptionally high traffic on the website. Two frontends are active at the time of writing (the number changes depending on the load), and they all send nearly the same amount of traffic.

The inbound traffic raises more delicate issues: the whole platform is available over HTTPS (check for the green lock at the top of your browser!) to secure your browsing. We do not enforce it, so you can still browse the platform in cleartext (but we discourage it). Delivering content over HTTPS is not free at all. The cost is barely visible at a very low scale, but it quickly brings heavy load and latency at a larger scale if it is not well managed (some of our frontends were saturated during the first migration because of that).

According to our measurements, we currently have a ratio of 1 HTTP visitor for every 4 HTTPS visitors, and between a few dozen and more than 1200 requests per second from the HTTPS visitors, so optimizing our SSL/TLS stack is really important.

That's why we carefully choose our available ciphers, with the following criteria:

- security first,

- compatibility with most browsers,

- speed.

Our supported ciphers are as follows:

ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:DHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-SHA256:DHE-RSA-AES128-SHA256:DES-CBC3-SHA

We prefer elliptic-curve-based key exchange (ECDHE_ECDSA) since it provides an equivalent level of security with shorter keys, so less data is exchanged and the computation cost is lower than with DHE (we observed a computation cost almost 2.5 times higher for DHE-based ciphers than for ECDHE-based ones).

The last cipher, "DES-CBC3-SHA", is only needed for compatibility with old configurations (especially Windows XP). It doesn't provide any Forward Secrecy and should be avoided as much as possible, but it's the only way we can offer HTTPS browsing to our XP users (they still represent 6% of our visitors in 2015). We encourage them to migrate to a more up-to-date system as soon as possible.
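The structure of the cipher list can be checked with a few lines of code. The sketch below is only an illustration (the helper names are ours, not part of our configuration): it groups the ciphers by key exchange, relying on the OpenSSL naming convention where an `ECDHE-` or `DHE-` prefix indicates an ephemeral key exchange, and any other name (here only DES-CBC3-SHA, which uses a static RSA key exchange) has no Forward Secrecy.

```python
CIPHER_STRING = (
    "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:"
    "ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:"
    "ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:"
    "ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:"
    "DHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-SHA256:"
    "DHE-RSA-AES128-SHA256:DES-CBC3-SHA"
)


def key_exchange(cipher: str) -> str:
    """Classify a cipher by key exchange, based on its OpenSSL-style name."""
    if cipher.startswith("ECDHE-"):
        return "ECDHE"
    if cipher.startswith("DHE-"):
        return "DHE"
    # No ephemeral prefix: static key exchange, hence no Forward Secrecy.
    return "static"


def group_by_key_exchange(cipher_string: str) -> dict:
    """Group ciphers by key exchange, keeping their order of preference."""
    groups = {}
    for cipher in cipher_string.split(":"):
        groups.setdefault(key_exchange(cipher), []).append(cipher)
    return groups


if __name__ == "__main__":
    for kind, ciphers in group_by_key_exchange(CIPHER_STRING).items():
        print(f"{kind}: {len(ciphers)} cipher(s)")
```

The grouping makes the order of preference visible: the ECDHE ciphers come first, the DHE ciphers follow, and the single cipher without Forward Secrecy is the last resort.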

We also support session caching and resumption to reduce the number of handshake round trips. With other basic optimizations, we now easily handle the incoming traffic, even with rapid increases like those we see when a piece of software is updated.

Backend

Behind this front part, our new backend is also designed to support this quantity of forwarded traffic: the whole backend network uses redundant 1 Gbps links with Jumbo Frames (an MTU of 9000 bytes) to improve performance (the benefit is mostly seen on NFS mounts, and thus on website rendering).
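As a rough illustration of why a larger MTU helps, the sketch below compares a standard 1500-byte MTU with a 9000-byte one. It is a back-of-the-envelope model, not a measurement of our network: it assumes TCP over IPv4 without options, no VLAN tag, and the usual 38 bytes of per-frame Ethernet overhead.

```python
# Per-frame Ethernet overhead in bytes:
# preamble + start delimiter (8), header (14), FCS (4), inter-frame gap (12).
ETHERNET_OVERHEAD = 38
# IPv4 header (20) + TCP header (20), both without options (assumption).
IP_TCP_HEADERS = 40


def payload_efficiency(mtu: int) -> float:
    """Share of the bytes on the wire that carry application payload."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


def frames_per_second(mtu: int, link_bps: int = 1_000_000_000) -> float:
    """Full-size frames per second needed to saturate the link."""
    return link_bps / 8 / (mtu + ETHERNET_OVERHEAD)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} payload, "
              f"{frames_per_second(mtu):,.0f} frames/s at 1 Gbps")
```

In this model the payload share only rises from about 95% to about 99%, but the number of frames needed to fill a 1 Gbps link drops roughly sixfold, which means far fewer packets for each machine to process.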

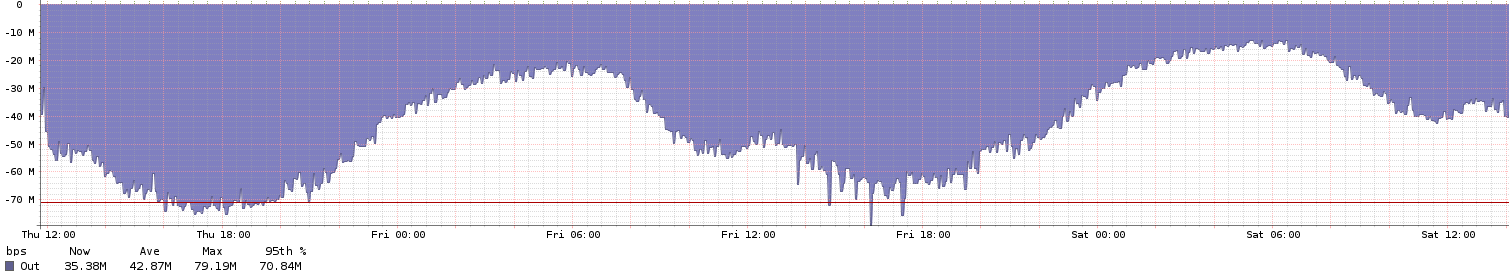

The outbound traffic since Thursday midday on one of our web backends.

Moreover, there are no more hard drives in our machines (except for backup servers). The production servers are equipped only with SSDs, once again to reduce latency.

The database part is also affected by the quantity of data handled. Our database servers currently store about 60 GB of data, and this increases by more than 5 GB per month.
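These two figures allow a simple capacity projection. The sketch below assumes linear growth at exactly 5 GB per month, which is a simplification: the real growth is higher than that and probably not constant, so the results are optimistic bounds. The function names and the 120 GB target are hypothetical.

```python
import math


def projected_size_gb(months: int, current_gb: float = 60.0,
                      growth_gb_per_month: float = 5.0) -> float:
    """Database size after the given number of months, assuming linear growth."""
    return current_gb + growth_gb_per_month * months


def months_until(target_gb: float, current_gb: float = 60.0,
                 growth_gb_per_month: float = 5.0) -> int:
    """Whole months before the database reaches the target size."""
    if target_gb <= current_gb:
        return 0
    return math.ceil((target_gb - current_gb) / growth_gb_per_month)


if __name__ == "__main__":
    print(f"Size in one year: {projected_size_gb(12):.0f} GB")
    print(f"Months until 120 GB: {months_until(120)}")
```

At this minimum rate the data set doubles in about a year, and since the actual growth is above 5 GB per month, it will happen sooner.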

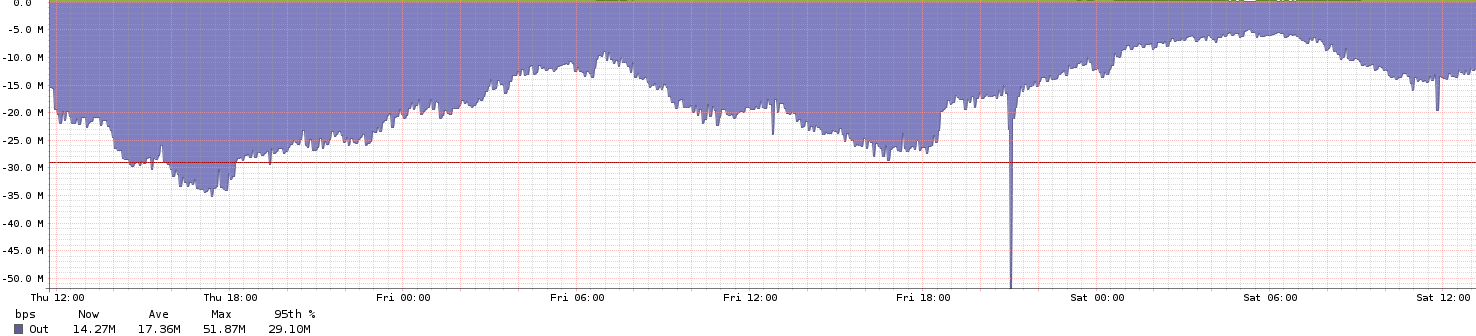

We also see these figures on our MariaDB servers, with around 20 Mbps of data throughput in a calm period.

The outbound traffic since Thursday midday on one of our MariaDB servers.

Website rendering has improved thanks to this redesign: page load time has dropped by 10 to 20 ms compared to the older infrastructure. It will get even better in the near future, when we are able to support HTTP/2. Download throughput has also improved a lot, and you have probably noticed it.

However, even if we obtain these benefits mainly thanks to the low-latency network, the infrastructure is also present in several datacenters, separated by more than 200 km. The other locations are used for other services and as a fallback for the main infrastructure in case of an outage.

We'll come back with other articles about our metrics (for example, the 1/5 ratio between our IPv6 and IPv4 users) in the future. In the meantime, don't forget to check our status page to follow our system maintenance and changes!